This article is reprinted from The Best of the 2020 Teaching Professor Conference.

Like many faculty members, I started teaching as a subject matter expert without a formal background in education. My exam questions were either ones I recalled from my own undergraduate experience or ones provided to me by more senior faculty. Since a poorly written exam could mean the difference between a student passing or failing my class, I wanted to learn more about exam design. What makes a good exam question? A good exam?

Drawing on my review of the literature, this article summarizes best practices to help you improve the quality of your exam questions and, consequently, your exams. The focus is on interpreting the item analysis reports generated by your institution’s learning management system (LMS) so that you can look beyond median student scores to determine how effective your exam is. I’ll also offer suggestions to help you write better test questions.

Why do faculty dislike multiple-choice questions?

Many faculty don’t like multiple-choice questions. When asked why they don’t use them, they respond, “They’re too easy,” “They don’t test subjects in depth,” or “They’re unfair to non-native speakers of English.”

In many instances, these criticisms are fair. At times, a case study or a task-based simulation will do a better job of assessing student learning. But by following the best practices discussed below, you can use the multiple-choice format to create effective questions that you can evaluate using item analysis.

What makes a good exam?

A good exam is fair; your students should feel that you assessed them on the content you taught them and expected them to learn. Exam questions should be appropriately difficult; they should challenge students without making the exam impossible.

According to Butler (2018),

The primary goal of assessment is to measure the extent to which students have acquired the skills and knowledge that form the learning objectives of an educational experience . . . To do so effectively, a test needs to differentiate students who have greater mastery of the to-be-learned skills and knowledge from students who have less mastery, which is referred to as discriminability.

Finally, an effective assessment should be both reliable and valid.

A test is reliable if test takers with similar subject matter knowledge earn similar marks on it. A test is valid if it measures what it intends or claims to measure. Reliability and validity measure different things. A ruler printed incorrectly, with each centimeter slightly too long, would give the same reading for the same distance every time, so it would be reliable. But the measurement would lack validity, because the ruler does not actually measure distance in centimeters.

Using item analysis to evaluate exam questions

Most LMSs automatically generate statistics you can use to improve your examinations. Item analysis will enable you to assess the validity and reliability of your exam and the effectiveness of your distractors (the incorrect options in a multiple-choice question).

There are three parts to item analysis: assessing item difficulty, assessing question discrimination, and analyzing distractors.

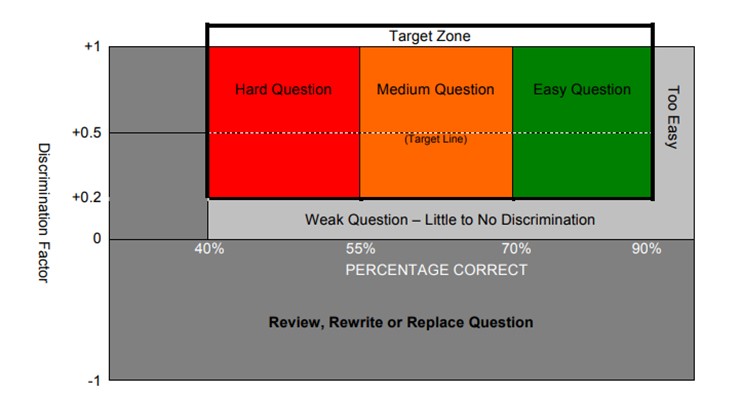

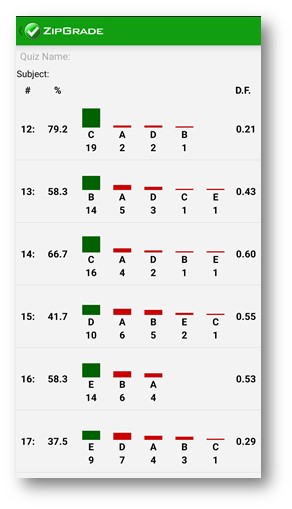

Figure 1. From http://www.gravitykills.net/Directory/Assessment/Item_Analysis_with_ZipGrade.pdf

1. Assessing item difficulty

The question difficulty is the proportion of examinees who answered the question correctly, so, counterintuitively, a higher value means an easier question. A question difficulty of 0.3 or less means that no more than 30 percent of examinees answered it correctly. Why did so many students get it wrong? Was there something about the wording that made it difficult? Was tricky wording used? Was the question too complex or the topic too difficult? You should eliminate questions that are too difficult.

You should also remove questions that are too easy, ones that almost everyone answers correctly. Personally, I leave a few in my exams to boost student confidence. But from a psychometric perspective, this is probably bad advice.

Most questions should be of moderate difficulty. The literature differs on what this means, but I aim for questions that between 50 and 70 percent of the class answers correctly. Note that the ideal difficulty from a psychometric perspective is 50 percent. If every question were at that level, though, the class average would also be 50 percent, and my students would revolt.
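For readers who want to check these numbers outside their LMS, here is a minimal Python sketch that computes each item's difficulty (proportion correct) from a table of 0/1 scores and labels it using the cut-offs above. The function names and response data are hypothetical, and the 0.8 "too easy" cut-off is an assumption borrowed from the worked examples later in this article, not a fixed rule.

```python
from typing import List, Tuple


def item_difficulty(responses: List[List[int]]) -> List[float]:
    """Proportion of examinees answering each item correctly.

    responses[s][i] is 1 if student s answered item i correctly, else 0.
    """
    n_students = len(responses)
    n_items = len(responses[0])
    return [sum(row[i] for row in responses) / n_students for i in range(n_items)]


def classify_difficulty(p: float,
                        too_hard: float = 0.3,
                        too_easy: float = 0.8,
                        target: Tuple[float, float] = (0.5, 0.7)) -> str:
    """Label an item by difficulty; the 0.8 'too easy' cut-off is an assumption."""
    if p <= too_hard:
        return "too difficult: review wording or topic"
    if p > too_easy:
        return "too easy"
    if target[0] <= p <= target[1]:
        return "moderate (target range)"
    return "acceptable"


# Hypothetical results: 5 students x 3 items (1 = correct)
responses = [
    [1, 1, 0],
    [1, 0, 0],
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
]
for i, p in enumerate(item_difficulty(responses), start=1):
    print(f"Item {i}: difficulty {p:.2f} -> {classify_difficulty(p)}")
```

In this made-up data, everyone answers item 1 correctly, item 2 sits in the 50 to 70 percent band, and only one student answers item 3 correctly.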

2. Assessing question discrimination

The discrimination factor (DF) (also known as the discrimination index) is a score between –1 and +1. The DF measures whether a question effectively separated skilled examinees from unskilled examinees. The higher the DF score for a question, the better.

A high positive DF means that examinees who answered the question correctly also tended to score well on the exam as a whole, while those who answered it incorrectly tended to score poorly. A high negative DF indicates the reverse situation.

Ideally, your exam questions should have high DF values; however, there are times when it is appropriate to include questions that do not. If you want to assess whether everyone learned something critical, you might include a question that tests this key concept. Such a question isn’t meant to separate students from each other, so a low DF is expected. If many students get it wrong, you may want to revisit how the material is taught in class.

If a question has a negative DF, your good students are getting it wrong while your weaker students are getting it right. Perhaps the question is tricky or your good students are overthinking it. Remove or remedy any question with a negative DF.

Questions with a DF of less than 0.2 discriminate poorly; that is, the correlation between a student’s score on the question and their score on the rest of the exam is weak. These questions require improvement. Questions with DFs greater than 0.5 are doing an excellent job of rewarding your best students: good students are getting these questions right, and weaker students are getting them wrong.
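LMSs compute the DF in different ways (some compare the top- and bottom-scoring groups instead), so your report may not match exactly. This Python sketch follows the definition in the paragraph above: it correlates each item's 0/1 score with the examinee's score on the rest of the exam, a corrected item-total (point-biserial) correlation, and then applies the 0, 0.2, and 0.5 cut-offs. The function names and response data are hypothetical.

```python
import math
from typing import List


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation; returns 0.0 if either variable has no variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0  # e.g., everyone answered the item the same way
    return cov / (sx * sy)


def discrimination(responses: List[List[int]], item: int) -> float:
    """Correlate the item score (0/1) with the score on the REST of the exam."""
    item_scores = [row[item] for row in responses]
    rest_scores = [sum(row) - row[item] for row in responses]
    return pearson(item_scores, rest_scores)


def classify_df(df: float) -> str:
    """Apply the DF cut-offs described in the article."""
    if df < 0:
        return "negative: remove or remedy"
    if df < 0.2:
        return "poor: needs improvement"
    if df > 0.5:
        return "excellent"
    return "acceptable"


# Hypothetical results: 6 students x 4 items (1 = correct)
responses = [
    [1, 1, 1, 0],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 0, 1],
    [0, 0, 0, 1],
]
for i in range(4):
    df = discrimination(responses, i)
    print(f"Item {i + 1}: DF {df:+.2f} -> {classify_df(df)}")
```

Item 4 in this made-up data is answered correctly only by the weakest students, so its DF comes out strongly negative, the "remove or remedy" case described earlier.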

3. Analyzing distractors

Better distractors will lead to better DFs. You want most students to choose the keyed response and the incorrect responses to be spread across the distractors. If students never select some distractors, remove or replace them. It is better to have fewer distractors than to have distractors that are implausible or rarely selected. Watch for questions for which most of the class chooses the same wrong answer! This could mean your keyed response is wrong or that the question is poorly designed.
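A distractor analysis boils down to counting how often each option was chosen. The Python sketch below, with hypothetical function names and answer data, reports each option's share, lists distractors nobody picked, and flags any distractor chosen more often than the keyed response. Treating "more popular than the key" as the warning sign for a miskeyed or flawed question is an assumption; you may prefer a different threshold.

```python
from collections import Counter
from typing import Dict, List


def distractor_report(choices: List[str], key: str, options: str) -> Dict[str, object]:
    """Summarize how often each option was chosen for one question."""
    counts = Counter(choices)  # missing options count as 0
    n = len(choices)
    distractors = [opt for opt in options if opt != key]
    return {
        # share of examinees choosing each option
        "shares": {opt: counts[opt] / n for opt in options},
        # distractors nobody picked: candidates to remove or replace
        "unused": [opt for opt in distractors if counts[opt] == 0],
        # distractors more popular than the key: possible miskey or flawed stem
        "beats_key": [opt for opt in distractors if counts[opt] > counts[key]],
    }


# Hypothetical question keyed A; nobody chose E
print(distractor_report(list("AAAAAAABCD"), key="A", options="ABCDE"))
# Hypothetical question keyed B; most of the class chose C
print(distractor_report(list("CCCCCCBBAD"), key="B", options="ABCD"))
```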

Let’s look at three examples of item analysis.

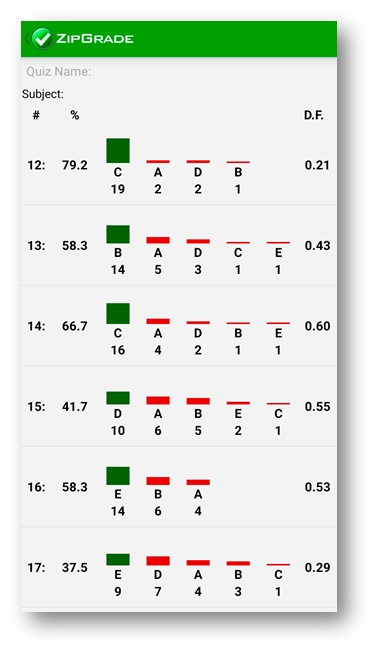

Example 1

Question 12: This question is too easy because nearly 80 percent of students answered it correctly. No one chose distractor E; thus, it could be eliminated. All distractors for this question should be revisited and revised to improve plausibility.

Question 16: Although discrimination is good, no one chose distractor C or D.

Takeaway: Review the questions to make the problematic distractors more plausible.

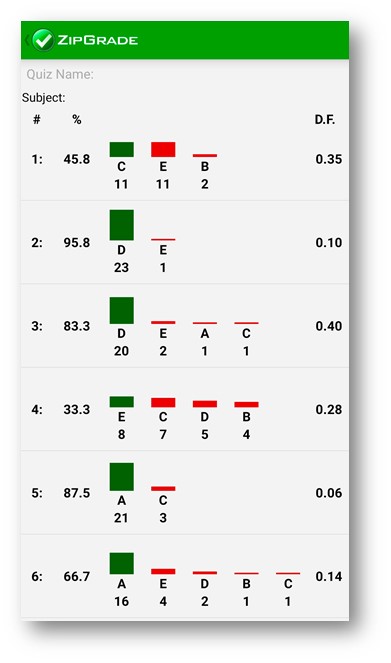

Example 2

Questions 2, 3, and 5 are too easy because the percentage of students answering them correctly is well above 80 percent. Questions 2 and 5 also don’t discriminate well (DF < 0.2). Despite being easy, question 3 discriminates reasonably well, so it probably could be left as is.

Questions 1 and 4 are probably too difficult. We should look at both questions to investigate why students answered them so poorly. Both have a DF > 0.2, so they may be useful for separating top students from each other.

Question 6 has a low DF despite an appropriate difficulty, and its distractors are well distributed. It may be worth revisiting this question to see whether its wording could be improved, since the question itself may be poorly written.

Example 3

Question 14 is an excellent question.

About 67 percent of the class got this right, the DF is 0.60, and the incorrect responses are spread across all the distractors. You want many questions like question 14.

Ten tips to improve your exams

In their seminal, widely cited paper, Haladyna et al. (2002) made 31 recommendations to develop better exams. This paper is well worth reading in its entirety. Here are 10 of their recommendations:

- Align test questions to learning outcomes. Create a test plan and think about which learning outcomes you want to measure and to what depth.

- Test on novel material to measure understanding and application. Don’t repeat exact wording from study material. Otherwise you’ll be testing recall and aiding examinees who search for solutions online.

- Ensure that each question tests a single learning outcome. While it is tempting to test more than one learning outcome in a question, if an examinee gets it wrong, you won’t know which learning outcome led to the incorrect answer.

- Avoid trick questions. Trick questions tend to have overly complicated stems that include too much irrelevant detail or use ambiguous or complex words. Keep your questions focused and to the point.

- Proofread your exams. Test creators often pay more attention to writing the keyed response (the right answer) than the distractors. A savvy test-taker might look for grammar errors to rule out incorrect options.

- Keep options homogeneous in content and grammatical structure. Avoid grammatical mismatches between the stem and the options that give clues to the correct answer.

- Keep options about the same length. Don’t make the correct answer the longest or shortest response. Distractors that are both lengthy and plausible can be challenging, yet quite fun, to write!

- Avoid options that give clues to the right answer. The literature is a bit mixed on this topic. As a guideline, avoid negative words, such as “not” or “except.” Consider asking “Which of the following is” instead of “Which of the following is not.”

- Make distractors plausible and include typical examinee errors when writing distractors. If no one chooses a particular distractor, it isn’t adding any value to your test! Distractors can be based on common student errors, linked to common misconceptions, or built from familiar keywords that are semantically related but incorrect. One tactic is to make statements that are true but unrelated to the question being asked. Another approach is to review students’ answers to your open-ended questions for common errors. These make excellent distractors.

- Use humor in your exam only if it’s compatible with your teaching style. If you don’t use humor in the classroom, your exam is probably not the best place to crack a joke.

What about “none of the above” (NOTA) questions?

Many instructors include NOTA as an option in their multiple-choice questions.

According to Blendermann et al. (2020),

When an initial multiple-choice item uses NOTA as an incorrect alternative . . . retention of tested information tends to be as good as standard (non-NOTA) multiple-choice items. However, multiple-choice items become much more difficult to answer correctly when the NOTA choice is correct and later retention suffers as a consequence.

Students learn by taking our exams. NOTA options, particularly when NOTA is the correct answer, can interfere with the learning process.

Distractor best practices

Increasing the number of distractors does not improve multiple-choice questions. Rodriguez (2005) conducted a meta-analysis of 80 years of research and concluded that the best balance between quality and efficiency comes from only three options: the correct answer and two plausible distractors.

By including fewer options, you can ask a larger number of multiple-choice questions in the same amount of time, which improves exam reliability. It’s better to have fewer plausible distractors than to add an extra one for the sake of having it there. If a student is unlikely to pick a distractor, don’t put it on the exam.
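One standard way to estimate how much extra questions help is the Spearman-Brown prophecy formula, which this article does not itself discuss: if a test with reliability r is lengthened by a factor k using comparable items, the predicted reliability is k·r / (1 + (k − 1)·r). The Python sketch below assumes a current reliability of 0.70 and that dropping a fourth option frees enough time to go from 40 to 50 questions; both numbers are hypothetical.

```python
def spearman_brown(reliability: float, length_factor: float) -> float:
    """Predicted reliability after lengthening a test by length_factor,
    assuming the new items are comparable to the existing ones."""
    k = length_factor
    return k * reliability / (1 + (k - 1) * reliability)


# Hypothetical: three-option items let you fit 50 questions instead of 40
current = 0.70
predicted = spearman_brown(current, 50 / 40)
print(f"Predicted reliability: {predicted:.3f}")
```

The gain is modest but real, and it comes without writing a single extra distractor.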

Conclusion

The literature provides useful guidance to help you create or select effective exam questions. Try creating questions in your LMS to assess your students. By reviewing your item analysis report from whatever LMS you use, you can continue to improve your exam questions and your exams.

References

Blendermann, M. F., Little, J. L., & Gray, K. M. (2020). How “none of the above” (NOTA) affects the accessibility of tested and related information in multiple-choice questions. Memory, 28(4), 473–480. https://doi.org/10.1080/09658211.2020.1733614

Butler, A. C. (2018). Multiple-choice testing in education: Are the best practices for assessment also good for learning? Journal of Applied Research in Memory and Cognition, 7(3), 323–331. https://doi.org/10.1016/j.jarmac.2018.07.002

Haladyna, T. M., Downing, S. M., & Rodriguez, M. C. (2002). A review of multiple-choice item-writing guidelines for classroom assessment. Applied Measurement in Education, 15(3), 309–333. https://doi.org/10.1207/S15324818AME1503_5

Rodriguez, M. C. (2005). Three options are optimal for multiple-choice items: A meta-analysis of 80 years of research. Educational Measurement: Issues and Practice, 24(2), 3–13. https://doi.org/10.1111/j.1745-3992.2005.00006.x

Further reading

Case, S., & Swanson, D. (2002). Constructing written test questions for the basic and clinical sciences. National Board of Medical Examiners.

Gierl, M. J., Bulut, O., Guo, Q., & Zhang, X. (2017). Developing, analyzing, and using distractors for multiple-choice tests in education: A comprehensive review. Review of Educational Research, 87(6), 1082–1116. https://doi.org/10.3102/0034654317726529

Klender, S., Ferriby, A., & Notebaert, A. (2019). Differences in item statistics between positively and negatively worded stems on histology examinations. HAPS Educator, 23, 476–486. https://doi.org/10.21692/haps.2019.025

Parkes, J., & Zimmaro, D. (2016). Learning and assessing with multiple-choice questions in college classrooms. Routledge. https://doi.org/10.4324/9781315727769

Alym A. Amlani, CPA, CA, MPAcc, is a business instructor at Kwantlen Polytechnic University, Langara College, and the University of British Columbia. He teaches financial accounting, cost accounting, financial modeling, information systems, and data analytics.