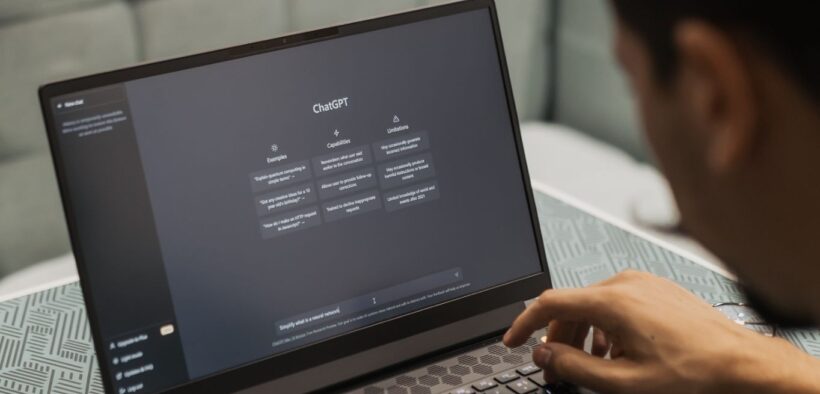

The arrival of ChatGPT sent shockwaves through academia, as articles with titles like “Yes, We Are in a (ChatGPT) Crisis” splashed across higher education media. Reports of students using it to write their papers made the immediate goal clear: keep students away from AI.

Then a countermovement began when instructors realized they could use AI to save time on their own work. Articles appeared on how to use AI to create lessons, give students feedback, generate assessments, write video scripts, and handle other time-consuming tasks. Institutions, meanwhile, have been using AI-powered chatbots to answer student questions for a couple of years.